Imaging Analysts

How bringing on board multimodality-trained personnel can impact oncology trials.

Clinical trials that assess the efficacy of cancer therapies are becoming increasingly dependent on imaging techniques as surrogate endpoints for the evaluation of treatment response. Patients with malignancy typically present with lesions that require serial magnetic resonance imaging (MRI), computed tomography (CT), and/or positron emission tomography (PET) scans for evaluation and follow-up. Treatment decisions, particularly in reference to longitudinal assessments, are based on the information in these scans.

In evaluating response to cancer therapy, it is important to determine whether a lesion is stable, responding to treatment, or progressing (growing), and if so, at what rate. However, the current state of practice at many institutions does not typically include accurate, quantitative, longitudinal measurements provided in a centralized, reliable, organized, and reproducible manner. Frequently, the existing workflows are inefficient and cumbersome, resulting in an unproductive use of the oncologist's, radiologist's, and their staffs' time.

A centralized image analysis service can provide increased reliability and consistency, thereby attaining greater confidence levels in data generated. However, the added cost of a central review can potentially limit trial investigators from utilizing this service. By employing a team of highly trained imaging analysts (IAs) to provide preliminary measurements for clinical trial assessment, centralized image services can improve efficiencies to reduce cost and turnaround time.

Imaging assessment issues

Response assessment criteria were established to add uniformity to radiologic evaluation.1 While these guidelines have significantly improved the quality of clinical trial data, they have also presented a number of challenges to those responsible for the evaluation of cancer therapies. One of the main challenges is obtaining consistent data.

Numerous issues can arise when applying response assessment guidelines. For example, the interpretation of radiologic scans can be subjective, with reviewers varying in their methods of identifying target lesions or performing lesion measurements. Each lesion measurement depends on which diameter a particular reviewer chooses, a choice that is often made without direct reference to what was measured on prior scans for that patient. Certain circumstances, such as the merging of two lesions into one, can further confound the results. As a result, inter-rater reliability across different reviewers is generally low. Additionally, measurement tools are not always consistent between clinical reading platforms, which can increase the degree of variability across imaging studies.

The lack of standardized training for radiologists and trial staff can also adversely impact data quality. Although many institutions have begun to offer basic seminars on data compliance and study management, this training is not generally required by the clinical research industry. Additionally, very few sites and sponsors offer response assessment criteria training for imaging reviewers. Instead, the skills that are needed to conduct a well-organized and controlled clinical research study are usually learned through trial and error, with many problems going unnoticed until the data collection has concluded. Compounding the problem is the frequent turnover of study staff, which is common among investigational sites. Without sufficient training programs, the influx of new personnel can be detrimental to the integrity of the data and the reliability of the study outcome.

Another challenge for investigators is the absence of an image-based longitudinal record. Typically, documentation of the radiological measurements is limited to paper records. As a result, sites frequently lack a readily available measurement assessment record for trial audits. When a regulatory auditor reviews the study progress, additional time and effort are therefore required to collect the records of imaging data at each site, which can be costly for both sponsors and trial staff.

Centralized imaging core

A centralized imaging core can provide many advantages to sponsors and investigational sites. By utilizing this expertise, investigators can optimize the imaging portion of their protocols through the selection of the most advantageous imaging modalities and response evaluation criteria. Imaging cores also offer advanced technologies for imaging analysis, data collection, and data reporting, such as 3D advanced visualization workstations, secure digital media transfer software, and Electronic Data Capture (EDC) systems, which offer flexible alternatives for data management.

By providing the most advanced technological solutions in a quality controlled environment, imaging cores can more effectively manage and monitor the results of multiple ongoing trials. Another asset to investigators is the regulatory affairs expertise available at many imaging cores. From study design consultation to data analysis and management, imaging cores are well-versed in current industry techniques and standards to ensure study compliance. Investigators can utilize this knowledge to strengthen the study protocol design and improve the integrity of the data.

Additionally, imaging cores can greatly improve the efficiency of radiology assessment by increasing the measurement consistency across reviewers and investigational sites. A central review by a limited number of trained reviewers serves to lessen measurement variability. Bias may also be reduced because the imaging core does not have information regarding treatment assignment. A centralized imaging core can thereby provide greater confidence in the data, increase statistical power, and improve the ability to detect response to therapy in clinical trials.

Centralized challenges

Centralized imaging cores face a myriad of challenges. Off-site centralized radiology review is usually done retrospectively, which precludes the replacement of missing or discrepant scans. Because of this delay, the on-site radiologist and oncologist are responsible for study enrollment and treatment decisions. Discrepancies between on-site and off-site reviews on issues such as eligibility may alter the study outcome. Furthermore, the added cost of a central review can deter investigators from utilizing this valuable service. For this reason, imaging cores must find creative ways to improve workflow efficiencies to reduce the cost and turnaround time, thereby making the service more affordable to smaller pharma companies and national clinical research groups.

The analyst's role

By utilizing imaging analysts to perform preliminary measurements of lesions on MRI, CT, and PET scans, a radiologist's assessment time can be used more efficiently. However, to optimize the effectiveness of imaging analysts, team members must have a working knowledge of anatomy, have experience in cross-sectional imaging, and undergo extensive instruction in clinical research principles. In addition to training in International Conference on Harmonization (ICH), Good Clinical Practice (GCP), the Health Insurance Portability and Accountability Act (HIPAA), and FDA guidelines, imaging analysts receive training in each protocol's imaging response assessment criteria. Training topics include Response Evaluation Criteria in Solid Tumors (RECIST and RECIST 1.1),2,3 World Health Organization (WHO),4 International Working Group Response Criteria (IWC)5 for Non-Hodgkin's Lymphomas, Standardized Uptake Values (SUV), and the European Organization for Research and Treatment of Cancer (EORTC) criteria6-8 for PET assessment, as well as volumetric measurement.9 With this knowledge, IAs are able to promote compliance and assist radiologists and investigators in ensuring the integrity of data analysis and results reporting.
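To make the kind of rule an IA is trained on concrete, the following is a minimal sketch of the RECIST 1.1 target-lesion thresholds (reference 3): partial response is at least a 30% decrease in the sum of diameters from baseline, and progressive disease is at least a 20% increase from the smallest sum on study (the nadir) together with an absolute increase of at least 5 mm. The function names are hypothetical, and the sketch deliberately omits non-target lesions, new lesions, and the lymph node short-axis rule, all of which a real assessment must also consider.

```python
from typing import Sequence


def sum_of_diameters(diameters_mm: Sequence[float]) -> float:
    """Sum the recorded diameters (in mm) of all target lesions at one time point."""
    return float(sum(diameters_mm))


def recist_target_response(baseline_sum: float, nadir_sum: float, current_sum: float) -> str:
    """Classify target-lesion response from sums of diameters (mm).

    Simplified illustration only: ignores non-target lesions, new lesions,
    and the nodal short-axis criterion for complete response.
    """
    if baseline_sum <= 0:
        raise ValueError("baseline sum of diameters must be positive")
    if current_sum < 0 or nadir_sum < 0:
        raise ValueError("sums of diameters cannot be negative")

    # Complete response: all target lesions have disappeared.
    if current_sum == 0:
        return "CR"

    # Progressive disease: >= 20% increase over the nadir AND >= 5 mm absolute increase.
    # Checked before partial response because progression takes precedence.
    increase = current_sum - nadir_sum
    if increase >= 0.20 * nadir_sum and increase >= 5.0:
        return "PD"

    # Partial response: >= 30% decrease relative to baseline.
    if (baseline_sum - current_sum) / baseline_sum >= 0.30:
        return "PR"

    # Neither sufficient shrinkage nor sufficient growth.
    return "SD"


if __name__ == "__main__":
    baseline = sum_of_diameters([42.0, 31.0, 27.0])   # hypothetical baseline lesions
    follow_up = sum_of_diameters([25.0, 20.0, 18.0])  # hypothetical follow-up lesions
    print(recist_target_response(baseline, nadir_sum=baseline, current_sum=follow_up))
```

Because progression is judged against the nadir rather than the baseline, each classification depends on the full measurement history for the patient, which is one reason a longitudinal record of measurements matters.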

Figure 1

Additionally, IAs can potentially address administrative issues that can delay study progress. With expertise in image analysis applications and radiology information systems, IAs can help trial staff acquire the image studies from the institution's PACS (Picture Archiving and Communication System) and resolve data collection issues, such as discrepant exam types or incompatible data formats, prior to radiology review. IAs can also serve as a valuable resource to radiologists by helping alleviate common issues that may keep them from effectively balancing the demands of their clinical and research workloads. By helping to manage the radiologists' trial workload and review schedule, and functioning as a point of contact between the radiologists and trial staff, a well-trained and knowledgeable IA team can help facilitate the clinical trial process.

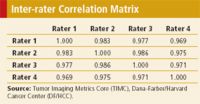

Table 1. The interclass correlation coefficients between each of the four raters.

Inter-rater reliability

In clinical trials, the validity of the study conclusion is limited by the reliability of the outcome that is measured. For this reason, it is essential for reviewers to undergo a reliability assessment, such as inter-rater reliability testing, before the study begins in order to verify measurement consistency and ensure a high level of agreement across reviewers.

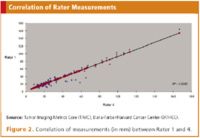

The Tumor Imaging Metrics Core (TIMC; www.tumormetrics.org), which is the on-site centralized imaging core for the Dana-Farber/Harvard Cancer Center, conducted a study to evaluate the inter-rater variability of RECIST measurements among four multimodality-trained IAs, and to assess the impact of preliminary measurements on workflow and quality control. The IAs, blinded to clinical assessments, independently selected and measured the longest diameter of 186 lesions from 20 CT scans (see Figure 1). The CT scans covered a variety of diseases, including gastrointestinal stromal tumor and gynecological, breast, and lung malignancies. After completion by all four IAs, the measurements were aggregated and analyzed with the statistical analysis software package SPSS 16.0. The results of this study show high agreement among the four IAs (see Table 1). Figure 2 shows the correlation of measurements between Rater 1 and Rater 4. Furthermore, only 5.4% of the measurements needed to be revised following radiologist review, reducing radiologist assessment time by more than 40%.
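As an illustration of how agreement across raters can be quantified, the sketch below computes one common reliability statistic: the two-way random-effects, absolute-agreement, single-rater intraclass correlation, often written ICC(2,1). This is an assumption for illustration; the article does not state which coefficient or SPSS options were used, and the measurements in the example are invented, not the TIMC data.

```python
from typing import Sequence


def icc_2_1(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(2,1) for a table with one row per lesion and one column per rater.

    Uses the two-way ANOVA mean squares:
    ICC = (MSR - MSE) / (MSR + (k - 1) * MSE + k * (MSC - MSE) / n)
    """
    n = len(ratings)          # number of lesions (rows)
    if n < 2:
        raise ValueError("need at least two lesions")
    k = len(ratings[0])       # number of raters (columns)
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("every lesion needs a measurement from the same >= 2 raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares: between lesions, between raters, total, and residual.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when the measurements have no variance")
    return (ms_rows - ms_error) / denominator


if __name__ == "__main__":
    # Hypothetical longest diameters (mm): 4 lesions measured by 2 raters.
    example = [[10.0, 11.0], [20.0, 19.0], [30.0, 31.0], [40.0, 40.0]]
    print(round(icc_2_1(example), 4))
```

A value near 1 indicates that most of the variation in the measurements comes from real differences between lesions rather than from differences between raters.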

Figure 2

Due to the aforementioned benefits, the preliminary assessment of radiological studies by IAs affords centralized imaging cores, such as the TIMC, the ability to increase their trial enrollment capacity. From 2007 until 2009, TIMC's core annual scan volume increased by over 500%.

Conclusion

Centralized imaging cores provide an essential service to multi-institutional clinical trials. Trials involve substantial amounts of data that are subject to intensive monitoring. By providing regulatory, medical, and technical expertise, imaging cores can assist sites in the effective management of many aspects of clinical trial research, enabling investigators to advance protocol planning, standardize data collection and assessment, and improve study compliance.

Furthermore, utilizing multimodality-trained IAs to perform preliminary radiological measurements for oncology clinical trials and establishing quality control protocols in imaging core laboratories produce the following benefits: variability across reviewers and time points is reduced; consistency and accuracy of measurements according to the clinical trial protocol are increased; and cost-efficiencies and turnaround time are improved. These benefits result in improved quality for radiological assessments of tumor measurements and a more focused use of imaging modalities as predictive and prognostic biomarkers in clinical research.

Authors' note: The authors would like to acknowledge the support provided by the Dana-Farber/Harvard Cancer Center Comprehensive Cancer Center grant NCI 5P30 CA006516-41.

Editor's note: A form of this article appeared in our June 2009 Oncology & Clinical Trials in the 21st Century supplement. It subsequently went through the peer-review process.

Trinity Urban is the Lead Imaging Analyst, Robert L. Zondervan is an Imaging Analyst, William B. Hanlon is Project Manager, all for the Dana-Farber/Harvard Cancer Center Tumor Imaging Metrics Core, 44 Binney Street, Boston, MA 02115. Gordon J. Harris, PhD, is the Director of the Massachusetts General Hospital, 3D Imaging Laboratory and Co-Director of the DF/HCC TIMC. Matthew A. Barish, MD, is an Assistant Professor of Clinical Radiology at Stony Brook University Medical Center and former Co-Director of the DF/HCC TIMC. George R. Oliveira, MD, is a Clinical Fellow at the University of Washington. Annick D. Van den Abbeele, MD,* is the Chief of the Department of Imaging at Dana-Farber Cancer Institute and the Co-Director of the DF/HCC TIMC, email: abbeele@dfci.harvard.edu.

*To whom all correspondence should be addressed.

References

1. A.B. Miller, B. Hoogstraten, M. Staquet et al., "Reporting Results of Cancer Treatment," Cancer, 47, 207-214 (1981).

2. P. Therasse, S.G. Arbuck, E.A. Eisenhauer et al., "New Guidelines to Evaluate the Response to Treatment in Solid Tumors," Journal of the National Cancer Institute, 92, 205-216 (2000).

3. E.A. Eisenhauer, P. Therasse, J. Bogaerts et al., "New Response Evaluation Criteria in Solid Tumours: Revised RECIST Guideline (version 1.1)," European Journal of Cancer, 45 (2) 228-247 (2009).

4. World Health Organization, Handbook for Reporting Results of Cancer Treatment, Offset Publication No. 48 (Geneva, 1979).

5. B.D. Cheson, S.J. Horning, B. Coiffier et al., "IWC: Report of an International Workshop to Standardize Response Criteria for Non-Hodgkin's Lymphomas," Journal of Clinical Oncology, 17, 1244-1253 (1999).

6. L. Shankar, "Standardization of PET Imaging in Clinical Trials," PET Clinics, 3, 1-4 (2008).

7. H. Young, R. Baum, U. Cremerius et al., "Measurement of Clinical and Subclinical Tumour Response using [18F]-Fluorodeoxyglucose and Positron Emission Tomography: Review and 1999 EORTC Recommendations," European Organization for Research and Treatment of Cancer (EORTC) PET Study Group, European Journal of Cancer, 35 (13) 1773-1782 (1999).

8. L.K. Shankar, J.M. Hoffman, S. Bacharach et al., "Consensus Recommendations for the use of 18F-FDG PET as an Indicator of Therapeutic Response in Patients in National Cancer Institute Trials," Journal of Nuclear Medicine, 47, 1059-1066 (2006).

9. A.G. Sorensen, S. Patel, C. Harmath et al., "Comparison of Diameter and Perimeter Methods for Tumor Volume Calculation," Journal of Clinical Oncology, 19, 551-557 (2001).