Oversight Method Identifies Critical Errors Missed by Traditional Monitoring Approaches

Study Health Check provides early identification of issues related to protocol deviations and determines objective measures of site-specific versus study-wide performance.

Incorporating Quality by Design (QbD) principles and practices is central to the ongoing effort to maximize subject safety, data integrity, efficiency, and cost-effectiveness in clinical trial development and execution as trials are modernized and adapt to a rapidly changing landscape.1,2 Risk-based quality management (RBQM) is intended to ensure that critical-to-quality errors not eliminated by trial design are identified and corrected as quickly as possible during study conduct. A step that is often overlooked in these activities is generating actual evidence of oversight effectiveness, or quality assurance (QA),3 and this omission leaves trials vulnerable. In a recent, high-profile example, events surrounding the VALOR Lyme disease vaccine study highlight how detrimental systemic failures can be if left unchecked.4

We have developed an independent, objective, and focused protocol-specific analysis, the “Study Health Check,” to directly evaluate and document the performance of monitoring activities and help minimize this important quality risk. The analysis is part of our practice and offerings at MANA RBM and is conducted using our proprietary methods and analytics software platform, UNIFIER™. It aims to provide a rapid, independent, and objective assessment of monitoring quality in protocol-specific, high-risk areas. It can be conducted at any point during a study, continuously throughout it, or after completion, whether for benchmarking or in preparation for regulatory inspections.

Here, we present results from one such Study Health Check performed in three protocol areas critical to trial success and integrity: Primary Endpoint, Subject Safety, and Investigational Product (IP). In this instance, the Study Health Check found systematic, high-frequency protocol errors that were not recorded as major deviations during conduct. As a result, these errors went largely unrecognized and often were not fully resolved.

Consequently, up to 41% of subjects in this example may not be directly evaluable for the primary endpoint under the original intent-to-treat (ITT) definition, and up to 23% under a modified ITT (mITT) definition.

From this analysis and others, we assert that improved QA practices will proactively mitigate this important and under-appreciated trial risk and provide increased confidence in results. These practices should include independent, protocol-specific assessments of monitoring effectiveness, ideally performed throughout a study. Our Study Health Check is a rapid, efficient, and cost-effective tool that meets this need.

Trial, monitoring plan, and Study Health Check overview

The trial was an investigator-initiated, Phase IV interventional study of a metabolic illness. It was conducted in the hospital critical care/emergency department setting, with a planned duration of 24 months and an estimated enrollment of 300 subjects. Subjects meeting eligibility criteria were randomly assigned to IP or placebo, given as two doses (t = 0 and t = 24 h). A safety-related ECG was performed at four hours, and serum chemistry values were collected at six hours for the Primary Endpoint assessment.

The Study Health Check for this trial was based on automated detection of major deviations from protocol-prescribed processes in the three critical areas listed above. Protocol deviations are common, affect a high proportion of subjects, and are increasing in frequency.5 Important protocol deviations are “a subset of protocol deviations that may significantly impact the completeness, accuracy, and/or reliability of key study data or that may significantly affect a subject’s rights, safety, or well-being.”6 They are therefore a direct measure of trial quality. Oversight quality was also evaluated directly by comparing the important protocol deviations detected automatically in the selected high-risk areas with those found and recorded manually by the monitor(s).

The traditional risk-based monitoring approach used during the study had three parts:

- weekly remote review of high-risk data;
- monthly internal central monitoring review of findings;
- a triggered external central monitoring review.

Remote review of high-risk data used an automated listing of computationally detected, protocol-specific discrepancies in critical data. The listing was updated weekly and provided to the monitor, who was to review it manually and determine which discrepancies were major protocol deviations. Specifically, the report contained a table of each subject with missing expected primary endpoint or safety assessments (and when they occurred), along with any subject missing one or more IP doses. If the monitor determined that a missing value constituted a protocol deviation, it was to be entered manually in the recording system. Deviations were expected to be entered monthly, before the CRO’s internal central monitoring review of results. No on-site monitoring was performed because of COVID-19.

An external central monitoring review was triggered after six months, when enrollment reached 10% of the expected total. To avoid duplicating effort, that review focused on quality areas other than missing endpoint, safety, and IP data (e.g., AE rates, queries, and data entry delays). Its detailed results are not presented here.
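At its core, the weekly listing described above applies a set of protocol-specific rules to each subject. The following is a minimal Python sketch of that idea, not the UNIFIER™ implementation. The `Subject` record, the `EXPECTED` rule table, and `missing_assessment_listing` are hypothetical names chosen for illustration. A real check would also need protocol visit windows and dose-timing tolerances, which are omitted here.

```python
from dataclasses import dataclass, field

# Hypothetical rule table paraphrasing the protocol described above:
# area -> (recorded item name, expected hours after the first dose).
EXPECTED = {
    "Safety": ("ecg", 4),
    "Primary Endpoint": ("serum_chemistry", 6),
    "IP": ("ip_dose_2", 24),
}

@dataclass
class Subject:
    subject_id: str
    site: str
    status: str
    # Item name -> hours after first dose at which it was recorded.
    recorded: dict = field(default_factory=dict)

def missing_assessment_listing(subjects):
    """One row per subject and critical area whose expected item is absent."""
    rows = []
    for s in subjects:
        for area, (item, hour) in EXPECTED.items():
            if item not in s.recorded:
                rows.append({
                    "subject_id": s.subject_id,
                    "site": s.site,
                    "area": area,
                    "expected_hour": hour,
                    # Missing critical-area data is flagged as a candidate
                    # major (important) deviation for the monitor to confirm.
                    "candidate_class": "Major",
                })
    return rows

subjects = [
    Subject("001", "A", "Completed", {"ecg": 4.1, "serum_chemistry": 6.0, "ip_dose_2": 24.0}),
    Subject("002", "B", "Completed", {"ecg": 3.9, "ip_dose_2": 24.5}),
]
listing = missing_assessment_listing(subjects)
for row in listing:
    print(row["subject_id"], row["area"])  # prints: 002 Primary Endpoint
```

Pre-classifying every hit as a candidate major deviation is a deliberate choice in this sketch. In the study described here, monitors recorded such errors as “Minor,” and a fixed rule removes that judgment step from the initial listing.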

The trial took place during the COVID-19 pandemic and faced changing, disease-specific management protocols, which reduced enrollment rates. Enrollment was therefore extended, and after 28 months the Study Health Check was conducted in preparation for close-out. The Check computationally analyzed the same listings provided to the monitor and summarized all potential deviations for comparison with the deviations entered in the recording system. Only deviations associated with subjects having a status of “Completed” were compared, to eliminate confounding temporal factors associated with a subject’s progression through the study. Differences between entered deviations with a status of “Confirmed” and those detected by the Study Health Check were also cross-checked to identify any mitigating explanation. One example would be a recorded deviation later corrected by updated information from a site but not yet marked as “Not Confirmed.”
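The comparison step can be pictured as set operations on (subject, area) keys. The sketch below works under stated assumptions: the function name `compare_deviations` and the record layouts are hypothetical. Real deviation logs may also need fuzzier matching (for example, combined entries covering several subjects), which this sketch does not handle.

```python
def compare_deviations(detected, entered, completed_ids):
    """Compare computationally detected deviations with those entered manually.

    detected: iterable of (subject_id, area) tuples from the automated listing.
    entered: iterable of dicts with "subject_id", "area", and "status" keys.
    completed_ids: set of subject IDs whose study status is "Completed".
    """
    det = {(s, a) for s, a in detected if s in completed_ids}
    ent = {
        (e["subject_id"], e["area"])
        for e in entered
        if e["subject_id"] in completed_ids and e["status"] == "Confirmed"
    }
    return {
        "matched": det & ent,
        # Potential important deviations that were never recorded.
        "detected_not_entered": det - ent,
        # Confirmed entries no longer detected: cross-check for mitigating
        # explanations, e.g. data later corrected by the site.
        "entered_not_detected": ent - det,
    }

# Hypothetical example data, not study records.
detected = [("001", "Primary Endpoint"), ("002", "Safety"), ("003", "IP"), ("009", "IP")]
entered = [
    {"subject_id": "002", "area": "Safety", "status": "Confirmed"},
    {"subject_id": "004", "area": "Primary Endpoint", "status": "Confirmed"},
]
completed = {"001", "002", "003", "004"}  # subject 009 is still active
result = compare_deviations(detected, entered, completed)
print(sorted(result["detected_not_entered"]))
```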

Study Health Check results

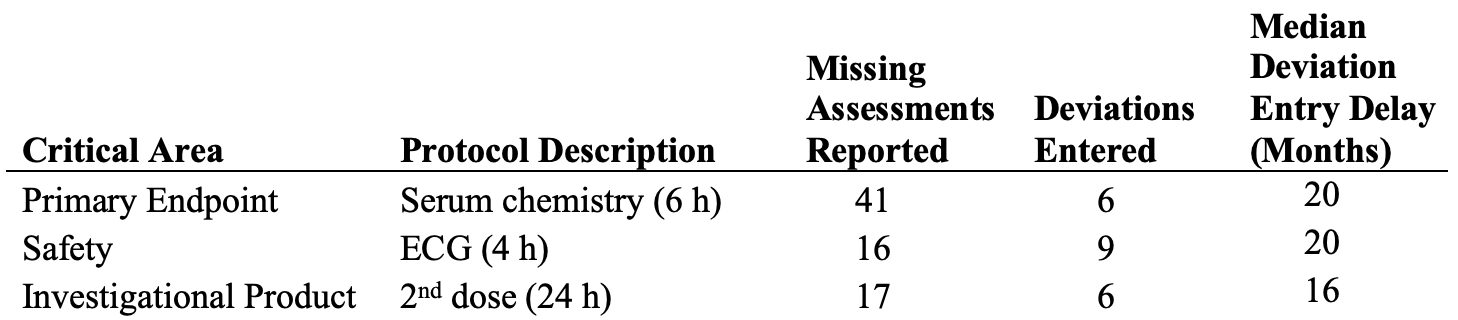

A total of 13 sites accounted for a study-wide total of 99 “Completed” subjects after 28 months of enrollment (Table 1). These subjects contributed to the listing analysis, which was compared against the 64 deviations entered. Notably, in the three critical areas we examined (Primary Endpoint, Safety, and IP dosing), only 28% (21 of 74) of the potential deviations provided in the listing report had been entered (Table 1). All 21 were listed as “Confirmed” but had been recorded as “Minor,” despite being major protocol errors.

Deviation entry completion (# Entered / # Reported) ranked as follows: safety (56%) > IP (35%) > primary endpoint (15%). These low completion rates were consistent with the finding of the external central monitoring review that no deviations had been entered by the end of the first six months of study enrollment. A follow-up analysis of audit trail data showed that substantial time passed between the occurrence of each deviation and its eventual entry (medians of 16 to 20 months; Table 1).

Table 1. Major protocol deviations detected in three critical study areas

Major protocol deviations detected computationally in three critical study areas that were reported to the sponsor versus those entered in the tracking system after 28 months of study enrollment (n = 99 subjects with a status of “Completed”) among 13 sites. Protocol-specific assessment time points relative to the first dose of study drug are given in parentheses. Deviation entry delay was measured from the time the deviation occurred to when it was recorded in the tracking system.

Source: Analysis of Study Health Check data, June 2023
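Two of the Table 1 metrics reduce to simple arithmetic. In the sketch below, the function names are hypothetical. Entry completion is the number of deviations entered divided by the number reported in the listing, so the study-wide figure for the three areas is 21 / 74 ≈ 28%. The entry-delay example uses made-up dates, and months are approximated as days divided by 30.44, an assumption for illustration only.

```python
from datetime import date
from statistics import median

def completion_rate(n_entered, n_reported):
    """Deviation entry completion: # entered / # reported in the listing."""
    return n_entered / n_reported

# Study-wide figure for the three critical areas: 21 of 74 entered.
print(f"{completion_rate(21, 74):.0%}")  # prints 28%

def median_entry_delay_months(pairs):
    """Median delay, in approximate months, from occurrence to entry.

    pairs: list of (occurred, entered) datetime.date tuples.
    Months are approximated as days / 30.44 (illustrative assumption).
    """
    return median((entered - occurred).days / 30.44 for occurred, entered in pairs)

# Hypothetical occurrence/entry dates, not study data.
pairs = [
    (date(2021, 3, 1), date(2022, 11, 1)),
    (date(2021, 5, 10), date(2022, 9, 10)),
    (date(2021, 6, 1), date(2023, 1, 15)),
]
print(round(median_entry_delay_months(pairs), 1))  # prints 19.5
```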

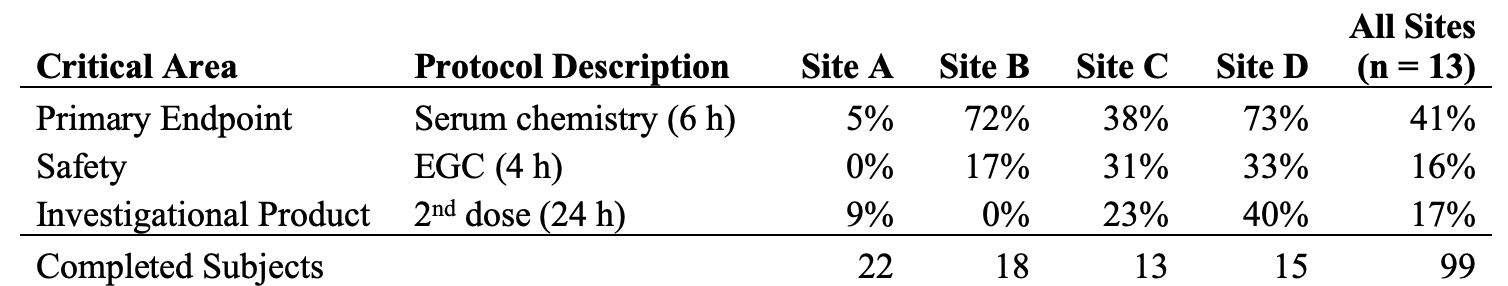

Table 2. Missing assessments in critical study areas

Percent of missing assessments in three critical study areas for sites with 10 or more completed subjects, for all sites combined, and the count of Completed subjects after 28 months of study enrollment. Assessment time points relative to the first dose of study drug are given in parentheses.

Source: Analysis of Study Health Check data, June 2023

Study-wide, 41% of completed subjects were missing their primary endpoint assessment, compared with only 16% missing a safety measurement and 17% missing an IP dose (Table 2). The majority of completed subjects (68%) were enrolled at four sites, which we analyzed further to identify patterns among sites. Only one of these sites (Site “A”) had missing assessment and IP dosing error rates below 10%. Two sites (“C” and “D”) performed especially poorly, with more than 20% of their completed subjects missing key data in each area evaluated (range 23% to 73%) (Table 2). Notably, two sites (“B” and “D”) were missing nearly three quarters of their primary endpoint assessments.
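Per-site percentages like those in Table 2 can be derived from a missing-item listing. The sketch below is hypothetical: the function names and toy data are invented for illustration. It counts each subject once per area and skips sites below the 10-completed-subject cut-off. It then flags sites above 20% in every area, mirroring the description of Sites “C” and “D.”

```python
from collections import defaultdict

AREAS = ("Primary Endpoint", "Safety", "IP")

def site_missing_rates(completed_by_site, missing_rows, min_completed=10):
    """Percent of completed subjects missing each critical item, per site.

    completed_by_site: dict of site -> number of Completed subjects.
    missing_rows: iterable of (site, subject_id, area) for Completed subjects.
    Sites below min_completed are skipped (the Table 2 cut-off is 10).
    """
    missing = defaultdict(set)
    for site, subject_id, area in missing_rows:
        missing[(site, area)].add(subject_id)  # count each subject once per area
    return {
        site: {area: 100 * len(missing[(site, area)]) / n for area in AREAS}
        for site, n in completed_by_site.items()
        if n >= min_completed
    }

def poor_performers(rates, threshold=20.0):
    """Sites whose missing rate exceeds the threshold in every critical area."""
    return sorted(s for s, r in rates.items() if all(v > threshold for v in r.values()))

# Hypothetical toy data, not study results.
completed_by_site = {"X": 10, "Y": 12, "Z": 4}
missing_rows = (
    [("X", f"X{i}", area) for i in range(3) for area in AREAS]
    + [("Y", f"Y{i}", "Primary Endpoint") for i in range(6)]
    + [("Y", "Y0", "Safety"), ("Z", "Z0", "IP")]
)
rates = site_missing_rates(completed_by_site, missing_rows)
print(poor_performers(rates))  # prints ['X']
```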

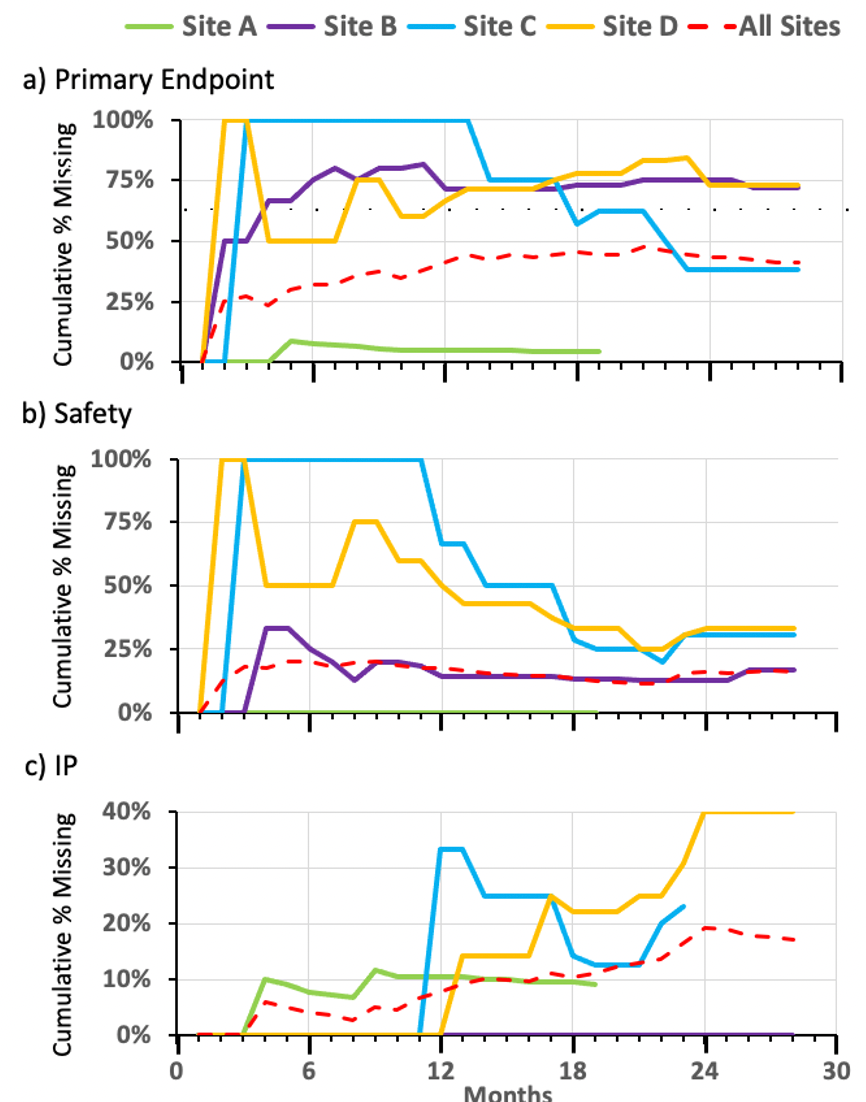

We tracked the percentage of missing primary endpoint and safety assessments per completed subject over time. These problems typically arose at sites within the first six months of enrollment, whereas IP deviations tended to occur later in the study (Figure 1). Mitigation of missing safety assessments through contact with the sites appeared generally effective (Figure 1b). Sites had mixed success in reducing missed Primary Endpoint assessments (Figure 1a; compare Site “C” with Site “D”). Any attempted remediation of missing IP doses did not curb the study-wide rise in their occurrence (Figure 1c).

Figure 1a, b, c. Cumulative percentage of missing assessments or doses per completed subject over time

Accumulation of missing assessments or doses in critical study areas at sites with ten or more completed subjects (Sites A–D) as of January 2023 for: a) Primary Endpoint (serum chemistry at 6 h), b) Safety (ECG at 4 h), and c) Investigational Product (IP) (2nd dose at 24 h). Cumulative percent was calculated at each month as the total number of missing assessments divided by the total number of completed subjects. Completed subjects n = 99 at the end of January 2023 among 13 sites with enrollment.

Source: Analysis of Study Health Check data, June 2023
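The cumulative percentage in the Figure 1 caption is calculated month by month. At month t, it is the running total of missing items divided by the running total of completed subjects, multiplied by 100. The following is a minimal sketch with made-up monthly counts; the function name `cumulative_percent` is hypothetical.

```python
from itertools import accumulate

def cumulative_percent(missing_per_month, completed_per_month):
    """Cumulative percent missing at the end of each month.

    Both inputs hold counts newly observed in each month, in calendar order.
    Months before any subject has completed return None.
    """
    cum_missing = accumulate(missing_per_month)
    cum_completed = accumulate(completed_per_month)
    return [
        100 * m / c if c else None
        for m, c in zip(cum_missing, cum_completed)
    ]

# Hypothetical site-level counts for five consecutive months.
pcts = cumulative_percent([1, 1, 0, 0, 1], [1, 2, 2, 3, 2])
print([round(p, 1) for p in pcts])  # prints [100.0, 66.7, 40.0, 25.0, 30.0]
```

Because the ratio is cumulative, early misses keep weighing on the curve long after they stop. A slowly declining line can therefore still reflect complete remediation, whereas monthly (non-cumulative) rates show such changes sooner.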

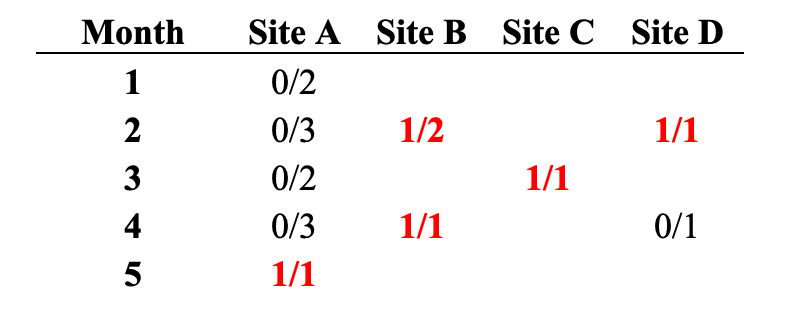

Direct counts of missing primary endpoint assessments confirmed that systematic errors occurred early in trial conduct (Table 3). Three of the four sites with the most completed subjects missed the primary endpoint assessment for their very first subject. Within five months of study enrollment, all four of these sites had at least one missed primary endpoint assessment. At Site “B,” the assessment was missed for every completed subject (Table 3).

Table 3. Analysis of sites’ missing primary endpoint assessments

Counts of missing primary endpoint assessments among Completed subjects in the first five months of study enrollment, for sites with 10 or more Completed subjects.

Source: Analysis of Study Health Check data, June 2023
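Counts like those in Table 3 can be produced in two steps: restrict to subjects enrolled in the first five months, then record whether each site’s first subject was missed. This sketch assumes, hypothetically, that the window is defined by enrollment date in whole calendar months. The helper names are invented for illustration.

```python
from datetime import date

def months_since(start, d):
    """Whole calendar months between start and d (illustrative approximation)."""
    return (d.year - start.year) * 12 + (d.month - start.month)

def early_primary_endpoint_summary(subjects, study_start, window_months=5):
    """Per-site counts of missed primary endpoints in the early enrollment window.

    subjects: list of (site, enrolled_on, missed_primary_endpoint) tuples for
    Completed subjects. Returns site -> counts, plus whether the site's first
    enrolled subject in the window was missed.
    """
    summary = {}
    for site, enrolled_on, missed in sorted(subjects, key=lambda s: (s[0], s[1])):
        if months_since(study_start, enrolled_on) >= window_months:
            continue  # outside the first window_months of enrollment
        if site not in summary:
            summary[site] = {"completed": 0, "missed": 0, "first_subject_missed": missed}
        summary[site]["completed"] += 1
        summary[site]["missed"] += int(missed)
    return summary

# Hypothetical subjects; enrollment starts January 2021.
subjects = [
    ("B", date(2021, 1, 20), True),
    ("B", date(2021, 3, 2), True),
    ("A", date(2021, 2, 5), False),
    ("A", date(2021, 4, 9), True),
    ("A", date(2021, 7, 1), True),  # July 2021 falls outside the first five months
]
summary = early_primary_endpoint_summary(subjects, date(2021, 1, 1))
for site, row in summary.items():
    print(site, row)
```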

Implications for central monitoring

Knowing the quality of trial oversight is critical to the success of QbD/RBQM approaches, and confirming that established QC processes are working as intended is integral to QA. However, demonstrable evidence of oversight quality is often unavailable, overlooked, or not addressed until issues with a trial arise. Our Study Health Check provides an independent, objective, quick, and quantitative assessment of both trial quality and monitoring effectiveness. In doing so, it addresses this QA shortcoming, helps meet the risk-based GCP guidelines established in ICH E6(R2)7 and forthcoming in E6(R3),8 and maximizes overall confidence in trial quality.

The results of applying this check to a recent trial, as described here, together with those of our previous work,9 provide a cautionary tale for sponsors and CROs about the quality of monitoring activities. The landscape is fraught with potential pitfalls and filled with examples of failures (where they have been uncovered and subsequently reported). In this case, one consequence of quality failure was the loss of up to 23% (mITT) to 41% (original ITT) of subjects directly evaluable for the primary endpoint. Sites with high enrollment consistently failed to collect the key data required by the protocol, and the extent of this critical error was not recognized until late in the trial owing to deficiencies in deviation confirmation and recording. Subjects were also directly affected by missing safety assessments, at a trial-wide rate of 16% and, at two sites, more than 30% of the time.

Industry self-assessments10,11 and global regulatory inspection findings12-14 suggest that under-utilization of RBQM approaches and failures of the monitoring process itself are commonplace. Failure to follow the investigational plan continues to be the most common cause of findings in FDA inspections of investigators, and failure to adequately monitor trial conduct remains the leading cause of sponsor/CRO findings.15 Thus, despite ample guidance, insufficient or poor monitoring practices that lead to an overall loss of trial quality persist. Findings based on monitoring quality failures, including failure to demonstrate appropriate oversight, can result in further exclusion of research subjects from the analysis dataset. This poses a significant risk for sponsors with marginal efficacy results or a limited number of assets.

Study Health Check is a further refinement of our initial work comparing Risk-Based Monitoring (RBM) with traditional Source Data Verification (SDV) in a vaccine trial.9 In that work, we showed that our technology-enabled, protocol-specific RBM approach was superior to SDV in finding safety reporting issues, critical systematic errors related to the primary endpoint, and IP issues, and that it reduced senior management oversight time. That earlier study was the first demonstration that the Study Health Check approach is effective and efficient for detecting important errors, a conclusion further supported by the current example.

Importantly, Study Health Check differs from common RBQM methods because it uses protocol-specific analytics rather than non-specific key risk indicators (KRIs) or statistical outlier approaches. It focuses on the most critical aspects of risk in an individual trial, which makes its study-specific analytics an essential feature. Non-specific, general indicators do not provide the sensitivity and specificity that the Study Health Check can deliver. For example, had the Study Health Check been performed after the first five to six subjects were completed, the risk to trial conduct could have been recognized at that point. At that stage, there were still too few data points for reliable trend detection by typical KRI or statistical monitoring approaches. This illustrates why the Study Health Check can be superior to, and is an important complement to, other central oversight methods.

Our systematic, automated identification of major (important) deviations used in this example is superior to manual processes and delivers improved consistency for subsequent assessments, including trending.9 In this study, monitors were provided with the listing of potential deviations and followed a “risk-based monitoring” approach. Nevertheless, they did not identify and record deviations promptly, did not categorize them correctly, and created combined entries that masked the actual deviation rates. As a result, systematic errors and differences in site performance in areas crucial to quality were not recognized, even though the data summaries and visualizations within UNIFIER™ are designed to assist with trend detection.

Significant under-reporting of important protocol deviations, whether through missed detection or incorrect categorization, can have profound negative impacts on monitoring quality, as can delayed reporting. Both pose a specific critical risk for central monitoring, in which early detection of trends is paramount to timely remediation. Central monitoring groups may use manually identified deviations, or may not use deviations at all while the trial is active. In either case, they may fail to catch critical study conduct errors and miss timely opportunities to improve trial quality at both the site and study levels, as occurred in this example.

Additionally, in this instance, a direct comparison of detected and reported deviations could not be made during the triggered external central monitoring review because no deviations had been entered. Although the delay in entry was noted, the internal team took no follow-up action to ensure that this finding was resolved. Ultimately, it was most common for important deviations to remain unrecorded until 20 months after their occurrence. This significantly compromised study quality and the ability to detect important quality trends.

Early identification of issues related to protocol deviations can help determine whether they are site-specific or study-wide, providing a more objective measure of performance. It also helps sponsors/CROs assess the feasibility of the protocol design and study procedures and decide whether changes are warranted. In this example, most IP and safety deviations depended on site research staff, whereas missing primary endpoint assessments typically did not. If the patient left the emergency department, either by choice or under institutional guidelines designed to reduce the spread of COVID-19, there was simply no way to collect the assessment and adhere to the study protocol. In the ever-changing landscape of medical care, early identification of systematic issues that might affect projected study timelines can facilitate the protocol amendments needed to meet deadlines without exceeding study budgets. It can also improve the overall protection of human subjects.

Our example provides Study Health Check results from a single timepoint during trial conduct, which is the absolute minimum needed to demonstrate quality oversight in critical areas. Although the assessment was performed during study close-out, an analysis performed during active enrollment would also have identified the critical errors. Depending on its timing, such an analysis could have allowed the protocol to be modified to yield more evaluable subjects, and improvements in monitor performance could also have been targeted. If the Study Health Check is used early in a trial, process issues can be identified and remedial action taken before errors accumulate. Additionally, oversight quality can be measured directly at multiple timepoints by reviewing the trial data (e.g., through monthly central review of programmatically identified, protocol-specific major deviations). This would allow continued detection and swift remediation of critical process errors, significantly improving study quality and further lowering trial risk.

Kevin T. Fielman, PhD, MANA RBM, Denver, CO, USA, and Insightful Analytics, Durham, NC, USA; Karina M. Soto-Ruiz, MD, Comprehensive Research Associates, Houston, TX, USA; and Penelope K. Manasco, MD, MANA RBM, Denver, CO, USA

Corresponding author: Kevin T. Fielman, PhD, Insightful Analytics, Durham, NC, 27704 USA, kfielman@manarbm.com

References

1. Suprin M, Chow A, Pillwein M, et al. Quality Risk Management Framework: Guidance for Successful Implementation of Risk Management in Clinical Development. Ther Innov Regul Sci. 2019;53:36-44. doi:10.1177/2168479018817752

2. Meeker-O’Connell A, Stewart A, Glessner C. Can quality management drive evidence generation? Clin Trials. 2022;19(1):112-115. doi:10.1177/17407745211034496

3. Manasco P, Bhatt DL. Evaluating the evaluators - Developing evidence of quality oversight effectiveness for clinical trial monitoring: Source data verification, source data review, statistical monitoring, key risk indicators, and direct measure of high risk errors. Contemp Clin Trials. 2022;117:106764. doi:10.1016/j.cct.2022.106764

4. Pfizer. Pfizer and Valneva Issue Update on Phase 3 Clinical Trial Evaluating Lyme Disease Vaccine Candidate VLA15. Accessed March 28, 2023. https://www.pfizer.com/news/announcements/pfizer-and-valneva-issue-update-phase-3-clinical-trial-evaluating-lyme-disease

5. Smith Z, Getz K. Incidence of Protocol Deviations and Amendments in Trials is High and Rising. Published online February 24, 2022.

6. Galuchie L, Stewart C, Meloni F. Protocol Deviations: A Holistic Approach from Defining to Reporting. Ther Innov Regul Sci. 2021;55(4):733-742. doi:10.1007/s43441-021-00269-w

7. ICH Harmonised Guideline: Integrated Addendum to ICH E6(R1): Guideline for Good Clinical Practice E6(R2). Published online November 9, 2016. https://database.ich.org/sites/default/files/E6_R2_Addendum.pdf

8. ICH Harmonised Guideline: Good Clinical Practice (GCP) E6(R3) Draft version. Published online May 19, 2023. Accessed June 13, 2023. https://database.ich.org/sites/default/files/ICH_E6%28R3%29_DraftGuideline_2023_0519.pdf

9. Manasco G, Pallas M, Kimmel C, et al. Risk-Based Monitoring Versus Source Data Verification. Applied Clinical Trials. 2018;27(11). Accessed February 16, 2023. https://www.appliedclinicaltrialsonline.com/view/risk-based-monitoring-versus-source-data-verification

10. Barnes B, Stansbury N, Brown D, et al. Risk-Based Monitoring in Clinical Trials: Past, Present, and Future. Ther Innov Regul Sci. 2021;55(4):899-906. doi:10.1007/s43441-021-00295-8

11. Stansbury N, Barnes B, Adams A, et al. Risk-Based Monitoring in Clinical Trials: Increased Adoption Throughout 2020. Ther Innov Regul Sci. 2022;56(3):415-422. doi:10.1007/s43441-022-00387-z

12. Garmendia CA, Epnere K, Bhansali N. Research Deviations in FDA-Regulated Clinical Trials: A Cross-Sectional Analysis of FDA Inspection Citations. Ther Innov Regul Sci. 2018;52(5):579-591. doi:10.1177/2168479017751405

13. Rogers CA, Ahearn JD, Bartlett MG. Data Integrity in the Pharmaceutical Industry: Analysis of Inspections and Warning Letters Issued by the Bioresearch Monitoring Program Between Fiscal Years 2007-2018. Ther Innov Regul Sci. 2020;54(5):1123-1133. doi:10.1007/s43441-020-00129-z

14. Sellers JW, Mihaescu CM, Ayalew K, et al. Descriptive Analysis of Good Clinical Practice Inspection Findings from U.S. Food and Drug Administration and European Medicines Agency. Ther Innov Regul Sci. 2022;56(5):753-764. doi:10.1007/s43441-022-00417-w

15. FDA. BIMO Inspection Metrics. Published March 3, 2023. Accessed March 28, 2023. https://www.fda.gov/science-research/clinical-trials-and-human-subject-protection/bimo-inspection-metrics
